Project Overview

This project uses computer vision and deep learning to support sign language communication by recognizing hand gestures captured through a webcam. The system detects and classifies sign language gestures in real time, making it a practical tool for helping bridge communication gaps.

Features

- Collect and preprocess sign language gesture images.

- Train a deep learning model for gesture recognition.

- Evaluate model performance using classification reports and confusion matrices.

- Real-time gesture detection and classification using a webcam.

- Visualize training progress and analyze dataset properties.

Project Structure

ai_sign_detector/
│
├── data/                      # Dataset folder
│   ├── raw/                   # Raw collected images or datasets
│   ├── processed/             # Preprocessed images (resized, normalized)
│   ├── landmarks/             # Extracted hand landmarks (optional)
│   ├── train/                 # Training set
│   ├── val/                   # Validation set
│   └── test/                  # Test set
│
├── models/                    # Saved models and checkpoints
│   ├── sign_model.h5          # Final trained model
│   └── checkpoints/           # Intermediate checkpoints during training
│
├── scripts/                   # Python scripts for the project
│   ├── collect_data.py        # Script to collect and save images
│   ├── preprocess_data.py     # Preprocessing pipeline (resize, normalize)
│   ├── train_model.py         # Model training script
│   ├── evaluate_model.py      # Script for testing and evaluation
│   ├── real_time_detect.py    # Real-time sign recognition
│   └── utils.py               # Utility functions (e.g., data loading, visualization)
│
├── notebooks/                 # Jupyter notebooks for prototyping
│   ├── data_exploration.ipynb
│   ├── model_training.ipynb
│   └── evaluation.ipynb
│
├── logs/                      # Training logs for debugging and monitoring
│   ├── tensorboard/           # TensorBoard logs
│   └── training_logs.txt      # Custom log files
│
├── outputs/                   # Outputs generated by the model
│   ├── predictions/           # Predicted results (e.g., images with overlays)
│   └── charts/                # Performance charts (loss, accuracy)
│
├── requirements.txt           # List of required Python libraries
├── README.md                  # Overview and instructions for the project
└── .gitignore                 # Files and folders to ignore in version control

Installation

Prerequisites

- Python 3.8 or later

- A webcam (for real-time detection)

- GPU (optional but recommended for training)

Setup Instructions

- Clone the repository:

    git clone https://github.com/timothyroch/ai_sign_detector.git
    cd ai_sign_detector

- Install dependencies:

    pip install -r requirements.txt

- Create the required directories:

    mkdir -p data/raw data/processed data/train data/val data/test models/checkpoints logs/tensorboard outputs/predictions outputs/charts
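`mkdir -p` is a POSIX shell option and is not available everywhere (for example, the Windows Command Prompt). A minimal cross-platform sketch using Python's standard `pathlib` creates the same directories; the function name `create_project_dirs` is hypothetical and not part of the repository.

```python
from pathlib import Path

# Same directory list as the mkdir -p command above.
REQUIRED_DIRS = [
    "data/raw", "data/processed", "data/train", "data/val", "data/test",
    "models/checkpoints", "logs/tensorboard",
    "outputs/predictions", "outputs/charts",
]


def create_project_dirs(root="."):
    """Create every required directory under `root`; existing ones are left as-is."""
    root = Path(root)
    created = []
    for rel in REQUIRED_DIRS:
        path = root / rel
        path.mkdir(parents=True, exist_ok=True)  # equivalent of `mkdir -p`
        created.append(path)
    return created
```

Calling `create_project_dirs()` from the repository root is safe to repeat, since `exist_ok=True` skips directories that already exist.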

Usage

Collect Data

python scripts/collect_data.py
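The contents of `collect_data.py` are not reproduced in this README. As a rough sketch of what a webcam collection step can look like, the code below assumes (hypothetically) that raw images are stored in one sub-folder per gesture under `data/raw/` (for example `data/raw/A/A_0000.jpg`) and uses OpenCV (`cv2`) for capture. Both function names are illustrative, not the script's actual API.

```python
from pathlib import Path


def next_image_path(raw_dir, label):
    """Return the next unused path for `label`, e.g. data/raw/A/A_0003.jpg.

    Hypothetical layout: one sub-folder per gesture label under raw_dir.
    """
    label_dir = Path(raw_dir) / label
    label_dir.mkdir(parents=True, exist_ok=True)
    indices = []
    for existing in label_dir.glob(f"{label}_*.jpg"):
        suffix = existing.stem.rsplit("_", 1)[-1]
        if suffix.isdigit():
            indices.append(int(suffix))
    # Continue after the highest index so existing files are never overwritten.
    return label_dir / f"{label}_{max(indices, default=-1) + 1:04d}.jpg"


def capture_gesture(label, num_images=100, raw_dir="data/raw", camera_index=0):
    """Save up to `num_images` webcam frames for one gesture; press 'q' to stop early."""
    import cv2  # imported lazily so next_image_path works without OpenCV installed

    cap = cv2.VideoCapture(camera_index)
    if not cap.isOpened():
        raise RuntimeError(f"Could not open camera {camera_index}")
    window = f"Collecting '{label}' (press q to stop)"
    saved = 0
    try:
        while saved < num_images:
            ok, frame = cap.read()
            if not ok:  # camera disconnected or no frame available
                break
            cv2.imwrite(str(next_image_path(raw_dir, label)), frame)
            saved += 1
            cv2.imshow(window, frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
    return saved
```

For example, `capture_gesture("A", num_images=200)` would record 200 frames of the 'A' gesture. Because every frame is saved, consecutive images are near-duplicates; pausing briefly between saves is one way to collect more varied samples.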

Preprocess Data

python scripts/preprocess_data.py
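Preprocessing in this project means resizing and normalizing images. A minimal NumPy sketch of those two steps follows; the 64x64 target size and the nearest-neighbour resize are assumptions (a real pipeline would more likely call `cv2.resize`), and the settings used by `preprocess_data.py` may differ.

```python
import numpy as np


def resize_nearest(image, size):
    """Nearest-neighbour resize of an (H, W) or (H, W, C) array to size=(height, width)."""
    out_h, out_w = size
    in_h, in_w = image.shape[:2]
    rows = np.arange(out_h) * in_h // out_h  # source row for each output row
    cols = np.arange(out_w) * in_w // out_w  # source column for each output column
    return image[rows[:, None], cols]


def preprocess(image, size=(64, 64)):
    """Resize to `size` and scale uint8 pixels from [0, 255] to float32 in [0, 1]."""
    resized = resize_nearest(image, size)
    return resized.astype(np.float32) / 255.0
```

Scaling to [0, 1] keeps input values in a small, consistent range, which generally makes neural-network training better behaved.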

Train the Model

First split the preprocessed images into training, validation, and test sets, then train the model:

python scripts/data_split.py
python scripts/train_model.py
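`data_split.py` is not described in detail here. One common way to fill `data/train`, `data/val`, and `data/test` is a seeded shuffle applied separately to each gesture, so every set keeps the same class balance. The sketch below assumes a 70/15/15 split and a fixed seed of 42; both are illustrative choices, not the project's documented settings, and the function names are hypothetical.

```python
import random


def split_files(files, train=0.7, val=0.15, seed=42):
    """Return (train, val, test) lists; the test set gets whatever remains."""
    if not 0 < train + val < 1:
        raise ValueError("train + val must be between 0 and 1")
    files = sorted(files)  # fixed starting order so the split depends only on the seed
    random.Random(seed).shuffle(files)
    n_train = round(len(files) * train)
    n_val = round(len(files) * val)
    return files[:n_train], files[n_train:n_train + n_val], files[n_train + n_val:]


def split_by_label(files_by_label, **kwargs):
    """Split each gesture's files separately so every set keeps the class balance."""
    splits = {"train": {}, "val": {}, "test": {}}
    for label, files in files_by_label.items():
        train, val, test = split_files(files, **kwargs)
        splits["train"][label] = train
        splits["val"][label] = val
        splits["test"][label] = test
    return splits
```

Using a dedicated `random.Random(seed)` instance makes the split reproducible without touching the global random state.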

Evaluate the Model

python scripts/evaluate_model.py
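The per-class numbers in a classification report (precision, recall, F1, support) can be computed directly from true and predicted labels. This pure-Python sketch shows the arithmetic; `evaluate_model.py` may instead rely on a library routine such as scikit-learn's `classification_report`, which is an assumption here, and the function names below are hypothetical.

```python
from collections import Counter


def per_class_metrics(y_true, y_pred, labels):
    """Return {label: (precision, recall, f1, support)} from parallel label lists."""
    pairs = Counter(zip(y_true, y_pred))  # (true, predicted) -> count
    report = {}
    for label in labels:
        tp = pairs[(label, label)]
        predicted = sum(n for (_, p), n in pairs.items() if p == label)  # TP + FP
        actual = sum(n for (t, _), n in pairs.items() if t == label)     # TP + FN (support)
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = (precision, recall, f1, actual)
    return report


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```

With `labels=["A", "B", "C", "D"]` this covers the four supported gestures; a class that is never predicted gets precision 0.0 rather than a division error.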

Real-Time Detection

python scripts/real_time_detect.py
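Per-frame predictions from a webcam tend to flicker between classes. One common remedy, not described in this README and shown here only as an illustrative technique, is to treat low-confidence frames as "no gesture" and take a majority vote over a short window of recent frames. The label order, the 0.8 confidence threshold, the 10-frame window, and the `PredictionSmoother` name are all assumptions.

```python
from collections import Counter, deque

import numpy as np

LABELS = ["A", "B", "C", "D"]  # assumed to match the model's output order


class PredictionSmoother:
    """Majority vote over the last `window` frames, ignoring low-confidence frames."""

    def __init__(self, labels=LABELS, threshold=0.8, window=10):
        self.labels = labels
        self.threshold = threshold
        self.history = deque(maxlen=window)  # oldest frames drop out automatically

    def update(self, probs):
        """Add one frame's class probabilities; return the smoothed label or None."""
        probs = np.asarray(probs)
        best = int(np.argmax(probs))
        # Low-confidence frames are recorded as None so they count against every label.
        self.history.append(self.labels[best] if probs[best] >= self.threshold else None)
        label, count = Counter(self.history).most_common(1)[0]
        # Report a label only when it holds a strict majority of the window.
        if label is not None and count > len(self.history) // 2:
            return label
        return None
```

In a capture loop, `probs` would be the model's softmax output for one frame (for a Keras model, something like `model.predict(batch)[0]`), and the returned label, or `None`, would be drawn onto the frame. A larger window gives steadier output at the cost of reacting more slowly to a new gesture.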

Visual Analysis of Model Performance and Predictions

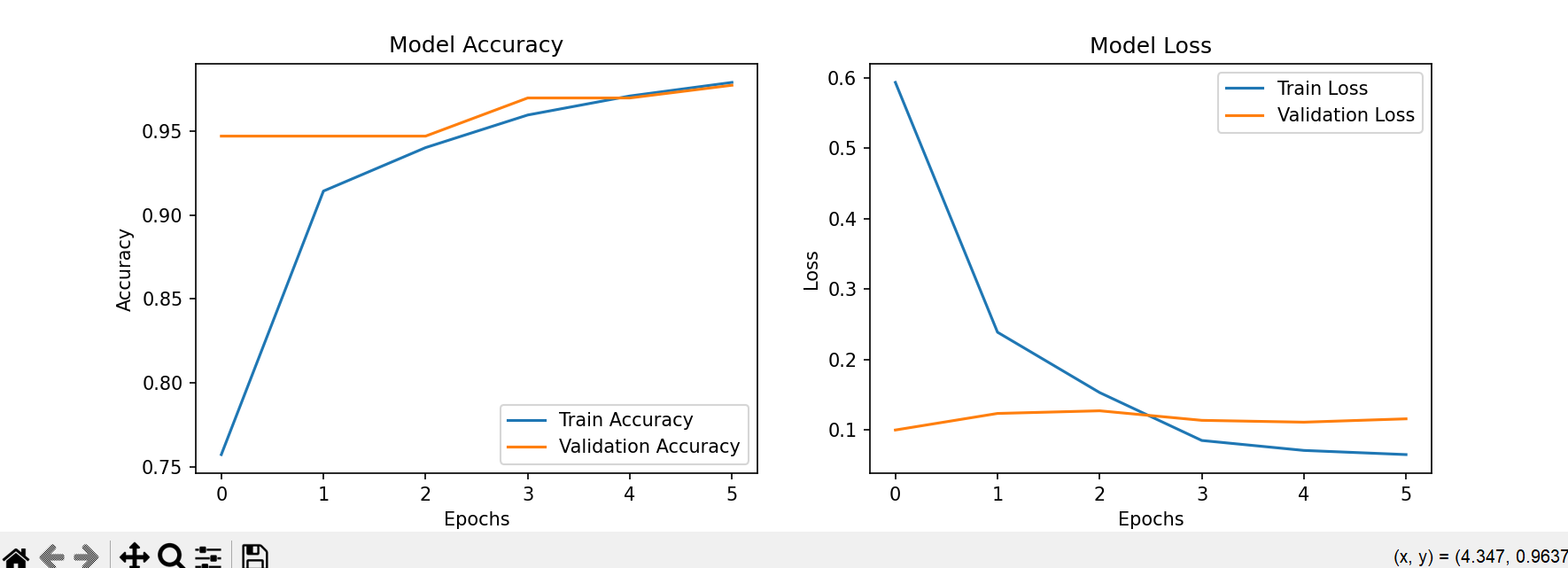

Training Accuracy and Loss: Graphs displaying model performance over epochs.

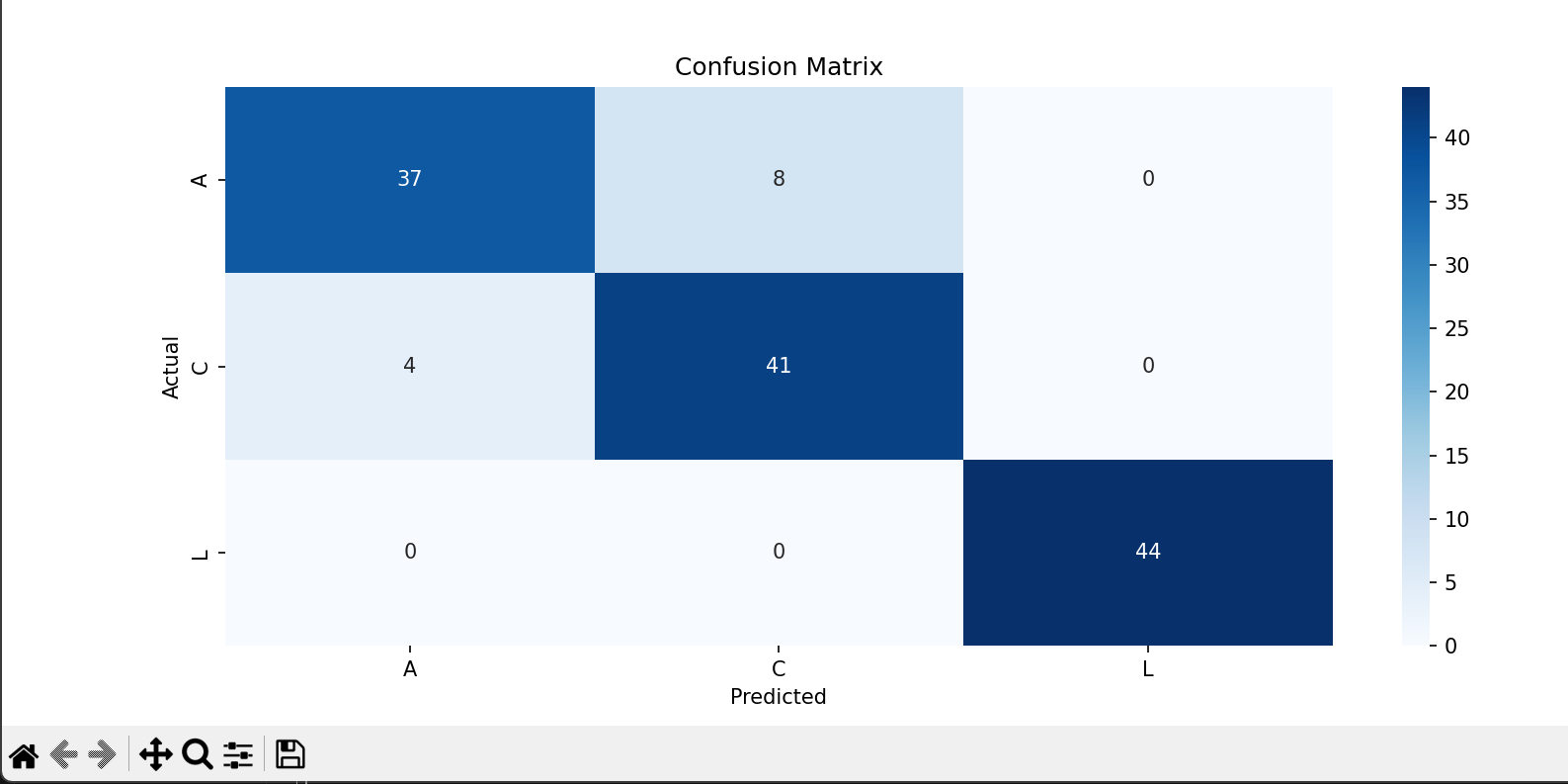

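Confusion Matrix: generated with TensorFlow, it compares true labels with predicted labels. In TensorFlow's convention (tf.math.confusion_matrix), rows correspond to true labels and columns to predicted labels. Diagonal cells count correct predictions, and off-diagonal cells show which gestures are mistaken for which. For any single gesture, its true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN) can be read from its row and column.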

Results

Final Accuracy: 97% on validation data.

Supported Gestures: 'A', 'B', 'C', 'D' (expandable to more gestures).

Contributing

Contributions are welcome! Follow these steps:

- Fork the repository.

- Create a new branch (git checkout -b feature-branch).

- Commit your changes (git commit -m "Add feature").

- Push the branch (git push origin feature-branch).

- Submit a pull request.

License

This project is licensed under the MIT License. See the LICENSE file for more details.

Contact

For questions or feedback:

- Email: timothyroch@gmail.com

- GitHub: timothyroch